AI Misinformation Sparks Racial Tensions and Political Impact

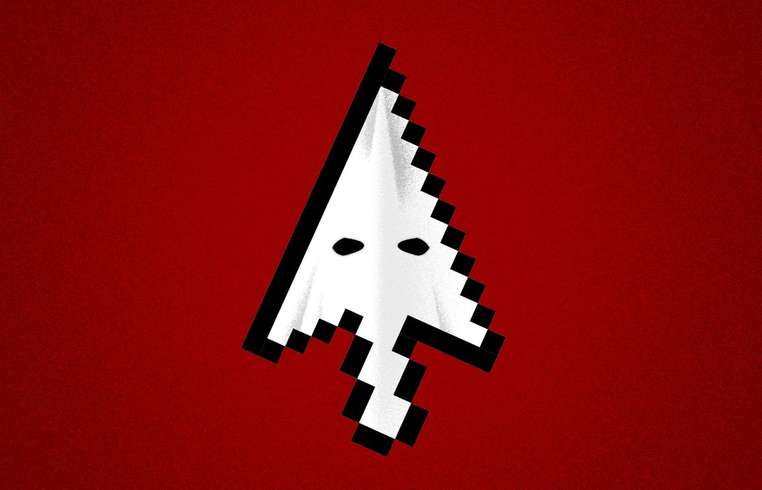

Creating and distributing AI-generated videos has become alarmingly easy, and the results are increasingly convincing. Tools like OpenAI's Sora and Google's Veo 3 are fueling a flood of artificial content that is hard to distinguish from authentic media. These advances have given rise to a digital phenomenon akin to 'blackface,' in which non-Black individuals create videos featuring Black or brown personas to accrue social currency such as likes and shares and, in some instances, money. The trend is especially troubling because social media platforms reward creators with revenue tied to viewership.

The insidiousness of these videos lies not only in their deceptive nature but also in their potential to perpetuate racial stereotypes and influence public perception. A series of fraudulent videos featuring purported Black women discussing the abuse of SNAP benefits during a government shutdown sparked a wave of vitriol online. The videos reinforced the harmful stereotype of Black women as 'welfare queens,' a trope that led some users to rally against the SNAP program itself. Statistical data counters this narrative: non-Hispanic white individuals make up the largest share of SNAP recipients. Yet content that seems to validate preconceived biases often leads users to favor emotional resonance over facts, which is what makes misinformation so potent on social platforms.

Michael Huggins of the racial justice group Color of Change highlighted the psychological impact of such fabricated content. Even when viewers recognize content as false, its negative connotations can seep into collective mindsets and shape attitudes toward real-world issues, including political elections.

Some tech companies are working to limit the spread of manipulative content through policies against hate speech and misinformation, but there remains room for improvement. OpenAI and Google have instituted safeguards such as banning slurs and applying digital watermarks to signal AI-generated content. Nonetheless, the reach and impact of these videos continue to present significant challenges.

According to organizational psychologist Janice Gassam Asare, the frivolity with which such content is often consumed is precisely what makes it so pernicious. She urges social media consumers to be discerning and to critically evaluate the authenticity of what they see. The fight against AI-driven misinformation underscores the urgency of greater awareness and platform accountability to safeguard societal values and democratic processes.